Writing Sample

Existing Technical Stack

- Google Cloud Platform

- Google Kubernetes Engine (GKE)

- PostgreSQL

- Language: Rust

Requirements

Suppose that there is a desire to explore creating and launching a new online service to help manufacturing companies communicate using photographs.

- The intended customer: large manufacturing companies, with 500+ employees

- Main use case:

- Communication between mechanical engineers, procurement, and factory

- Vague requirements:

- File storage and sharing, where the files are primarily PNG and JPEG photos

- Be able to annotate the photos with circles and start a message thread

- Allow multiple individuals to respond in the message thread

- Vague scenario: a factory worker notices a scratch on a part. They can take a photo, circle the scratch, annotate it with a question “Is this ok?” and an engineer can respond in a thread, similar to the Google Docs annotation/suggestion feature.

- There is quite a bit of time pressure to show that this software is viable and delivers value to users

- You are the first engineer assigned to this project, and no code has been written yet. Your design document will drive the resourcing strategy, including engineer reassignments and new hiring.

Design Diagram

Design Details

I have some experience with similar systems, and I draw on it here.

At first glance, the requirements describe an IM-like (instant messaging) system whose main focus is message exchange. The requirements never mention real-time communication, latency, or message loss rate, but I think real-time delivery is a must-have in practice: when people run into a problem at work, they want an answer as soon as possible.

So let's design it as an IM system.

Core: Message Exchange

We need a message exchange center that is high-performance, reliable, and scalable, and preferably push-based.

Apache Pulsar is a good choice. As its website describes, it can be deployed on Kubernetes, which is our current platform; it is easy to scale out; and it is a push-based system, which suits real-time communication.

But the deciding reason is that it has proven itself in my previous experience: a related department built a huge real-time signal platform on it, and it worked well for both small-scale exchange and large-scale broadcast.

Of course, if roughly 500 employees were the only requirement, any message queue system could do the job, even Redis pub/sub. I prefer a more powerful system to leave room for business or non-core requirements, which I come back to later.
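Since more than one broker could do the job at this scale, one option is to hide the broker behind a small interface so that it can be swapped later. The following is a minimal sketch under that assumption, not a Pulsar or Redis client: the `MessageExchange` trait and the `InMemoryExchange` stand-in are hypothetical names invented for illustration, and the in-memory version only mimics push-based delivery with standard library channels.

```rust
use std::collections::HashMap;
use std::sync::mpsc::{channel, Receiver, Sender};

/// Hypothetical abstraction over the broker (Pulsar, Redis pub/sub, ...).
pub trait MessageExchange {
    /// Push `payload` to every live subscriber of `topic`.
    /// Returns how many subscribers received it.
    fn publish(&mut self, topic: &str, payload: &str) -> usize;

    /// Register a new subscriber and return its receiving end.
    fn subscribe(&mut self, topic: &str) -> Receiver<String>;
}

/// In-memory stand-in, useful for tests or a very early MVP.
#[derive(Default)]
pub struct InMemoryExchange {
    subscribers: HashMap<String, Vec<Sender<String>>>,
}

impl MessageExchange for InMemoryExchange {
    fn publish(&mut self, topic: &str, payload: &str) -> usize {
        match self.subscribers.get_mut(topic) {
            Some(subs) => {
                // Sending fails once the receiver is dropped, so failed
                // senders are removed from the subscriber list.
                subs.retain(|tx| tx.send(payload.to_string()).is_ok());
                subs.len()
            }
            None => 0,
        }
    }

    fn subscribe(&mut self, topic: &str) -> Receiver<String> {
        let (tx, rx) = channel();
        self.subscribers
            .entry(topic.to_string())
            .or_default()
            .push(tx);
        rx
    }
}
```

With this shape, the rest of the system depends only on the trait, so starting with a lightweight broker and moving to Pulsar later would not have to touch the business logic.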

Thread Management

Anyone can create a thread, so the natural approach is to create one topic per thread and put the thread's messages in that topic. Anyone can subscribe to the topic to publish and receive messages in the thread.

But there is a problem. In the beginning, connections are cheap. As the number of threads grows, and since users never want to archive threads (they just want to keep them), we will end up with too many topics and too many connections.

So the solution is to build message exchange in two layers. The first layer is thread management, which is responsible for creating and deleting threads, replaying their messages, and recording which user is in which thread. The second layer is the user layer, which is simply one topic per user.

- Once a user subscribes to a thread, thread management replays the thread's full message history to that user's topic.

- Once a thread receives a new message, thread management pushes it to the topics of all users subscribed to the thread.
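The two rules above can be sketched in memory as follows. This is only an illustration of the replay and fan-out logic, assuming plain vectors in place of real broker topics; `ThreadManager`, `Message`, and all field names are hypothetical.

```rust
use std::collections::{HashMap, HashSet};

/// A message posted in a thread (fields are illustrative).
#[derive(Clone, Debug, PartialEq)]
pub struct Message {
    pub thread_id: u64,
    pub author: String,
    pub body: String,
}

/// First layer: owns thread history and membership.
/// Second layer: one queue (a "user topic") per user.
#[derive(Default)]
pub struct ThreadManager {
    history: HashMap<u64, Vec<Message>>,
    members: HashMap<u64, HashSet<String>>,
    user_topics: HashMap<String, Vec<Message>>,
}

impl ThreadManager {
    /// Subscribe `user` to a thread and replay the thread history
    /// into the user's topic. Subscribing twice does not replay again.
    pub fn subscribe(&mut self, thread_id: u64, user: &str) {
        let newly_added = self
            .members
            .entry(thread_id)
            .or_default()
            .insert(user.to_string());
        if !newly_added {
            return;
        }
        let past = self.history.get(&thread_id).cloned().unwrap_or_default();
        self.user_topics
            .entry(user.to_string())
            .or_default()
            .extend(past);
    }

    /// Store a new message and push one copy to every subscribed user.
    /// Returns the number of copies delivered (the fan-out).
    pub fn publish(&mut self, msg: Message) -> usize {
        self.history
            .entry(msg.thread_id)
            .or_default()
            .push(msg.clone());
        let mut delivered = 0;
        if let Some(users) = self.members.get(&msg.thread_id) {
            for user in users {
                self.user_topics
                    .entry(user.clone())
                    .or_default()
                    .push(msg.clone());
                delivered += 1;
            }
        }
        delivered
    }

    /// Take everything currently waiting in a user's topic.
    pub fn drain(&mut self, user: &str) -> Vec<Message> {
        self.user_topics.remove(user).unwrap_or_default()
    }
}
```

The return value of `publish` makes the cost visible: every message is copied once per subscriber, which is the price paid for keeping a single topic per user.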

This is a little heavy, but it avoids problems that are bound to occur as the numbers grow, whether of users or of threads.

It also has other advantages, such as more advanced permission control. Multiple layers leave more room for complex business logic.

It also has disadvantages: the logic is more complex, and the number of messages exchanged is multiplied, because each message is copied once per subscribed user.

If the core exchange system leaves more room for flexibility, the abstraction can be more adaptable, allowing business requirements to be implemented more easily.

Two Parts

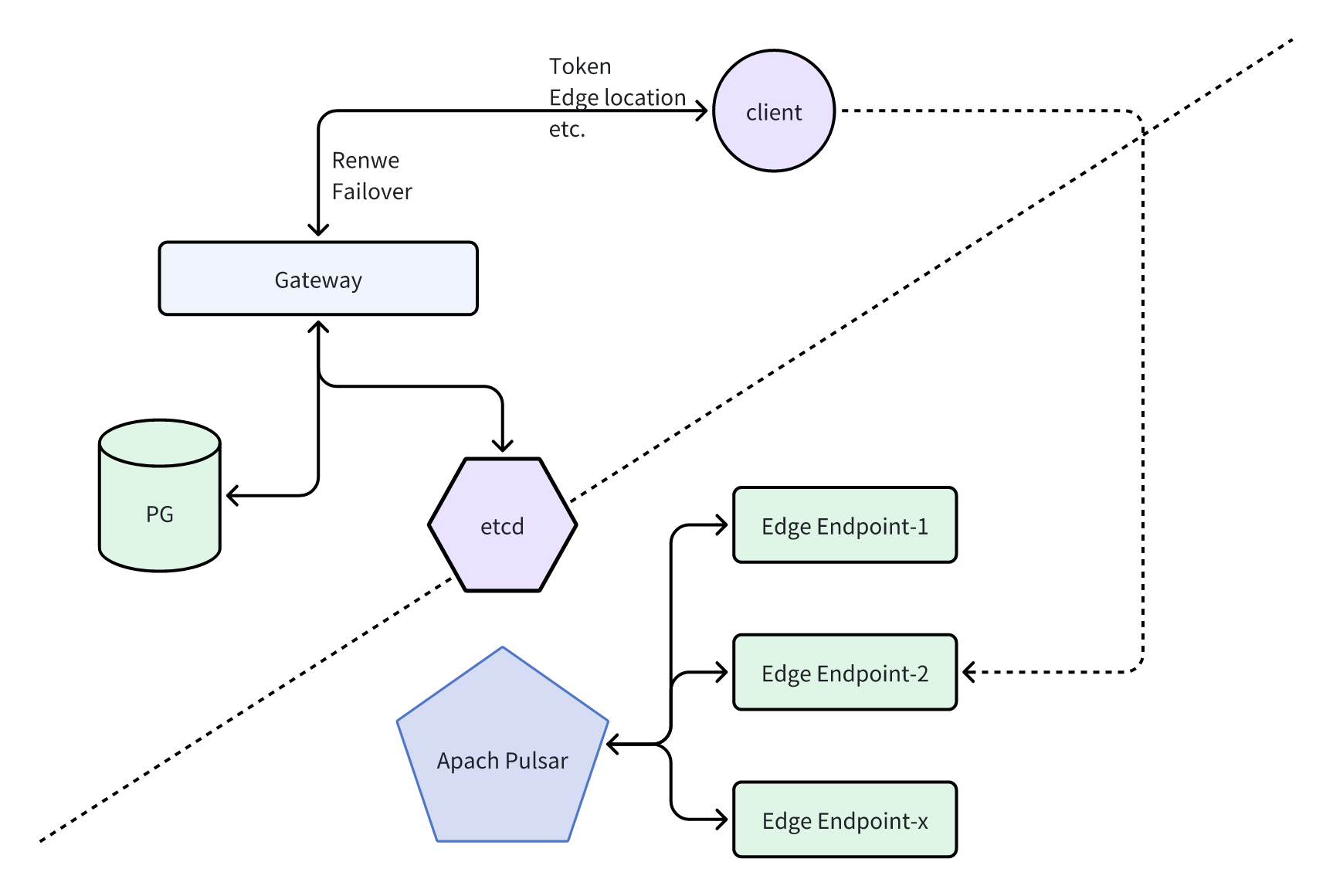

Back to the diagram, here is the timeline of a client establishing a connection to the server:

- The client sends a request to the gateway with authentication information, such as a user ID and a password.

- The gateway authenticates the user and returns a token, an endpoint location, and other information (such as the protocol).

- The client connects to the endpoint and starts a connection (TCP, UDP, QUIC, WebSocket, etc.) using the token.

- The client keeps a heartbeat (ping/pong) so that the gateway can keep observing the connection and react to situations such as an edge node going down or the user being banned.
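The four steps above can be sketched from the gateway's point of view. This is a minimal illustration under several assumptions: the `Gateway`, `Ticket`, and `GatewayError` names are hypothetical, the endpoint URL is a placeholder, tokens are simple counters rather than signed credentials, and passwords are kept in plain text only to keep the sketch short (a real system would store salted hashes).

```rust
use std::collections::{HashMap, HashSet};

/// What the gateway hands back after a successful login.
#[derive(Debug, Clone, PartialEq)]
pub struct Ticket {
    pub token: String,
    pub endpoint: String,
    pub protocol: String,
}

#[derive(Debug, PartialEq)]
pub enum GatewayError {
    BadCredentials,
    Banned,
    UnknownToken,
}

#[derive(Default)]
pub struct Gateway {
    passwords: HashMap<String, String>, // user id -> password (sketch only)
    banned: HashSet<String>,
    sessions: HashMap<String, String>, // token -> user id
    next_token: u64,
}

impl Gateway {
    pub fn register(&mut self, user: &str, password: &str) {
        self.passwords.insert(user.to_string(), password.to_string());
    }

    pub fn ban(&mut self, user: &str) {
        self.banned.insert(user.to_string());
    }

    /// Steps 1 and 2: authenticate, then return token, endpoint, protocol.
    pub fn login(&mut self, user: &str, password: &str) -> Result<Ticket, GatewayError> {
        match self.passwords.get(user) {
            Some(p) if p.as_str() == password => {}
            _ => return Err(GatewayError::BadCredentials),
        }
        if self.banned.contains(user) {
            return Err(GatewayError::Banned);
        }
        self.next_token += 1;
        let token = format!("tok-{}", self.next_token); // placeholder token
        self.sessions.insert(token.clone(), user.to_string());
        Ok(Ticket {
            token,
            endpoint: "wss://edge.example.invalid/ws".to_string(), // placeholder
            protocol: "websocket".to_string(),
        })
    }

    /// Steps 3 and 4: an endpoint calls this on connect and on every
    /// heartbeat, so a ban takes effect on the next ping.
    pub fn heartbeat(&mut self, token: &str) -> Result<String, GatewayError> {
        let user = self
            .sessions
            .get(token)
            .cloned()
            .ok_or(GatewayError::UnknownToken)?;
        if self.banned.contains(&user) {
            self.sessions.remove(token);
            return Err(GatewayError::Banned);
        }
        Ok(user)
    }
}
```

Keeping the ban check inside `heartbeat` means the message endpoints never need their own copy of the ban list; they only relay the gateway's answer.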

Logically, then, the system has two parts. One is the gateway, which is responsible for authentication and connection management; we can call it the manage system. The other is the message exchange system, which is responsible for exchanging messages; we can call it the message system.

Why do that?

In distributed systems, we generally strive to design self‑consistent architectures.

Imagine three messaging subsystems running in Tokyo, Shanghai, and Mumbai. Each subsystem is fully self‑consistent: it can accept new users on its own and exchange messages with the others across the backbone network. With this design, no dedicated gateway is required, making the overall system more flexible and scalable.

However, in rare cases a region might become isolated—for example, Mumbai could lose its connection to the other regions. The local subsystem would still run and receive messages, but it wouldn’t be able to forward them elsewhere. In that scenario, operators in Tokyo can do nothing but wait for Mumbai to come back online.

A gateway offers a valuable option: when a subsystem is already designated to be decommissioned, we can manage client reconnections to other endpoints more gracefully.
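As a rough illustration of that option, the gateway could keep a small registry of endpoints and stop handing out the ones marked for decommissioning. The sketch below assumes a simple "prefer the client's region, otherwise any active endpoint" rule; `EndpointRegistry`, `EndpointState`, and the region names are hypothetical.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum EndpointState {
    Active,
    /// Scheduled for decommissioning: no new connections are assigned.
    Draining,
}

#[derive(Debug, Clone)]
pub struct Endpoint {
    pub region: String,
    pub url: String,
    pub state: EndpointState,
}

pub struct EndpointRegistry {
    endpoints: Vec<Endpoint>,
}

impl EndpointRegistry {
    pub fn new(endpoints: Vec<Endpoint>) -> Self {
        Self { endpoints }
    }

    /// Change the state of every endpoint in `region`.
    /// Returns false if the region is unknown.
    pub fn set_state(&mut self, region: &str, state: EndpointState) -> bool {
        let mut found = false;
        for e in self.endpoints.iter_mut().filter(|e| e.region == region) {
            e.state = state;
            found = true;
        }
        found
    }

    /// Pick an endpoint for a (re)connecting client: an active one in the
    /// preferred region if possible, otherwise the first active one.
    pub fn assign(&self, preferred_region: &str) -> Option<&Endpoint> {
        let mut fallback = None;
        for e in &self.endpoints {
            if e.state != EndpointState::Active {
                continue;
            }
            if e.region == preferred_region {
                return Some(e);
            }
            if fallback.is_none() {
                fallback = Some(e);
            }
        }
        fallback
    }
}
```

When operators mark a region as `Draining`, clients that reconnect through the gateway are steered to another region without any change on the client side.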

The manage system can be simple, and therefore reliable. The trick is to make the control (manage) system simple and reliable, and to let the message system be more complex and replaceable.

There is quite a bit of time pressure to show that this software is viable and delivers value to users

Does this pattern make the time pressure easier to handle? Not directly. What it does is make both systems simpler, and simple means easy to troubleshoot, easy to maintain, and easy to replace.

Standard

We have defined two parts for the system, so when a new feature is required, we can decide which part it belongs in.

The decision should follow the design intention, not the literal names. The pattern is meant to make the system more flexible and adaptable to change; it does not mean that all control logic must live in the manage system, or that the manage system must avoid any message logic.

Many details have to be considered in real development, and staying true to these intentions is not easy.

Edge Endpoint & Storage

The idea is simple: the closer the endpoints are to users, the better the performance and the lower the latency. A cloud platform's backbone network is generally more efficient than the route a client would take on its own.

AWS has its own edge network that brings static resources closer to users to reduce latency, and Google Cloud Platform offers a comparable service (Cloud CDN) that should be easy to adopt.

Status Sync

We need to sync status between the manage system and the message systems, such as user online status and thread status, depending on the requirements.

This component must be strongly consistent and distributed. As a Java developer, I would normally choose Apache ZooKeeper, but I think etcd is a better fit for Kubernetes and a cloud-native environment.
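For online status in particular, a lease-style record fits well: a user counts as online only while heartbeats keep renewing an entry with a time-to-live, which is the same idea etcd exposes through leases. The sketch below is an in-memory illustration of that logic only and makes no etcd calls; `PresenceTable` is a hypothetical name, and the caller passes the current time in seconds so the behavior stays deterministic.

```rust
use std::collections::HashMap;

/// Lease-style presence: an entry is valid until `expires_at`.
pub struct PresenceTable {
    ttl_secs: u64,
    expires_at: HashMap<String, u64>, // user id -> expiry time (seconds)
}

impl PresenceTable {
    pub fn new(ttl_secs: u64) -> Self {
        Self {
            ttl_secs,
            expires_at: HashMap::new(),
        }
    }

    /// Record a heartbeat, which creates or renews the user's lease.
    pub fn heartbeat(&mut self, user: &str, now: u64) {
        self.expires_at.insert(user.to_string(), now + self.ttl_secs);
    }

    /// A user is online while the lease has not yet expired.
    pub fn is_online(&self, user: &str, now: u64) -> bool {
        self.expires_at.get(user).map_or(false, |&t| now < t)
    }

    /// Remove expired leases and return the users who just went offline,
    /// sorted so the result is stable.
    pub fn sweep(&mut self, now: u64) -> Vec<String> {
        let mut gone: Vec<String> = self
            .expires_at
            .iter()
            .filter(|(_, t)| **t <= now)
            .map(|(u, _)| u.clone())
            .collect();
        gone.sort();
        for u in &gone {
            self.expires_at.remove(u);
        }
        gone
    }
}
```

In a real deployment the table would live in the consistent store rather than in one process, so that both the manage system and every message system see the same answer.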

Engineer reassignments & New hiring

I trust the current team can handle this pattern. The core component is easy to stand up while there are not many users. The gateway and endpoints can be written in Rust, the client in React, and the protocol can be web-based (for example, WebSocket). Message persistence and management can be ignored in the beginning. So we can staff a team of 2-3 engineers and release an MVP in 1-2 months.

Then, if the MVP is successful, we will need some specialized engineers to join the team, such as:

- A DevOps engineer to manage the Kubernetes cluster and cloud resources, especially the core message exchange system (Pulsar or another message queue system).

- A network engineer to verify the performance between edge endpoints and clients, and to build an observability system that monitors latency and message loss rate.

- Specialized client engineers, such as iOS and Android developers. Native clients are necessary if we want image-processing algorithms or customized protocols.

Final Thoughts

After all, this system is only a sketch. Many details are not yet considered, such as security, replication, and backup and recovery.

But I think the pattern is a good start, and it can be adapted to many different requirements and scenarios.